I’m finally back in the world of games development after a long sojourn selling my soul for cold, hard cash in the world of active directory and corporate software. I’ve managed to pick up development of my multiplayer WebGL game and push it to some form of completion too (It’ll be up later this week at an un-specified location pending load tests).

That’s the commit history for the project, starting off as an exercise in trying to do some TDD with some basic WebGL code to somehow ending up building a game of hovercraft, neon-blue grids, and explosions (I’m not good with assets, so making everything glow seems like the cheapest way to make it pretty).

What has been interesting throughout this project (for me) has been the at times subconscious attempts to carry on ‘doing things the way I do it in most of my day to day software’, and finding out that some of the rules don’t apply in this particular space – and some surprising ones do (or at least, in this specific game in this specific space).

First to go, TDD

Ah yes, TDD – this is a principle I’m beginning to lose my love for in both worlds for a multitude of reasons, but when I started this project I thought I’d try applying this way of working to this side of the fence. I created a walking skeleton of the basic technology I was to be using (JavaScript, a browser, WebGL, a server with NodeJS) and started to write tests for each feature as I wanted to add them.

This started falling over pretty quickly as most of the first features required fundamental integration tasks to be carried out before writing the higher level code (manipulating the DOM, playing with WebGL, loading shaders, connecting to servers) – each of these things requiring a tiny amount of code applied in just the right order in just the right way for the particular feature. As far as feedback cycles go, it was faster to hit ctrl-f5 in a browser and watch the actual code do what it was meant to do or fail pitifully than it was to try automating the running and assertions on those problems.

In essence, these things could have been solved by heading off into a blank project and doing a formal spike, before coming back to the main project and laying out the tests for them to help with the design. It would have given little value however, as they were already-solved problems and the code would change little if I designed it from the perspective of testability or otherwise. I decided to treat the entire project as one giant spike into this domain and just roll with the punches.

Next up, the behaviour itself. This is where the formal TDD approach probably would have been a bit beneficial, but I was already feeling headstrong about my initial progress and wanted to just start spitting out features. This led me down a bit of a dark alley: the code I produced wasn’t written or designed for testability and relied too heavily on a heap of external components being present, which made it too expensive to automate the running of the behaviour. Funny how that happens accidentally even though I supposedly know what a de-coupled system looks like.

I actually sorted that out eventually, arriving at a good, clean, testable design when solving a different problem entirely – making it possible to write tests that looked like this:

    test("Hovercrafts can acquire targets when only one target is around", function() {
        var context = GivenAnEmptyScene()
            .WithAHovercraftCalled("one")
            .WithAHovercraftCalled("two")
            .WhereNotPointingAtEachOther("one", "two")
            .Build();

        var targettingCraft = context.FindCraft("one");
        var targettedCraft = context.FindCraft("two");

        targettingCraft.AimAt(targettedCraft);

        ok(targettingCraft.RaisedTargetAcquiredEventForCraftWithId("two"));
    });

Or something along those lines anyway – note how I said “made it possible”. I found that the highest-value tests I could write were the ones describing complex interactions between entities in the scene (while ignoring external components). But because the system is relatively small, because I’m the only developer, and because I was changing everything all of the time to make it more fun to play, they simply weren’t worth writing.

If I had been part of a team or the system was larger and more complex I think it would have been worth it – and the lesson on how to arrive at this point was a valuable one, I’ll be writing tests for my next game at about this level and not worrying about the fluff.
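For illustration, here is a minimal sketch of how a builder like the one in that test might be put together. Only the method names used in the test come from the real code; the `Craft` type, its fields, the `targetAcquired` event name and every method body are assumptions made up for this example.

```javascript
// Hypothetical sketch: none of these implementations come from the game itself.
function Craft(id) {
  this.id = id;
  this.position = { x: 0, y: 0 };
  this.heading = 0;        // radians
  this.raisedEvents = [];  // recorded so that tests can assert on them
}

Craft.prototype.raiseEvent = function (name, data) {
  this.raisedEvents.push({ name: name, data: data });
};

// Turn to face the other craft and announce that a target was acquired.
Craft.prototype.AimAt = function (other) {
  this.heading = Math.atan2(other.position.y - this.position.y,
                            other.position.x - this.position.x);
  this.raiseEvent("targetAcquired", { targetId: other.id });
};

Craft.prototype.RaisedTargetAcquiredEventForCraftWithId = function (id) {
  return this.raisedEvents.some(function (e) {
    return e.name === "targetAcquired" && e.data.targetId === id;
  });
};

// Fluent builder: each step returns the builder so the calls can be chained.
function GivenAnEmptyScene() {
  var crafts = {};
  var builder = {
    WithAHovercraftCalled: function (id) {
      crafts[id] = new Craft(id);
      return builder;
    },
    WhereNotPointingAtEachOther: function (a, b) {
      // Place the crafts side by side, each facing away from the other.
      crafts[a].position = { x: 0, y: 0 };
      crafts[b].position = { x: 10, y: 0 };
      crafts[a].heading = Math.PI;
      crafts[b].heading = 0;
      return builder;
    },
    Build: function () {
      // No renderer, network or DOM involved: just the entities.
      return {
        FindCraft: function (id) { return crafts[id]; }
      };
    }
  };
  return builder;
}
```

The point of the design is that `Build()` hands back a scene containing nothing but entities, so a test can exercise interactions between them without any of the external components being present.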

The big difference here is, I guess, not one of games development but of mentality: I’m unused to working on projects where I’m the sole owner and won’t have to ship the code off to another developer in six months. Apart from the core integration code, I think there could be a lot of value in writing tests around gameplay interactions in most games dev; in this I don’t think our two worlds differ all that much.

Messaging

Push, don’t pull – that’s the mantra in most of the software worlds I work on – send a command, forget about it, raise an event, forget about it – deal with your own problems, maintain your own consistency, and let everybody else do the same.

I messed this up big time. It turns out that dealing with messaging in an interactive simulation across multiple clients and a server can throw up some pretty interesting issues, and at one point I was cursing my decision to go with a push-based architecture because it was becoming such a nightmare to deal with.

Now, other than duplication of data (and there certainly is some, memory is not my bottleneck), the biggest issue was just trying to work out which event had come from where and why it arrived in the order it had and why it had made something happen that shouldn’t have happened.

My mistake was to treat this system like any other piece of business software I have worked on, when most of that software has been of minimal behavioural complexity. Going back to my statement above about “maintain your own consistency, and let everybody else do the same”, I wasn’t paying anywhere near enough attention to this and ended up with inconsistency everywhere: I was capturing events at the wrong level, I was dealing with state at the wrong level – I was … well yeah, doing it wrong.

I spent a few days tidying up this mess and formalising the distinction between the different types of messages: commands are external inputs to the system telling it to do something, events are things that have happened, and anything that goes over the wire or between entities is going to be one of these things.
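As a rough sketch of what that split can look like in code (the message shape, the `kind` field and all of the function names here are assumptions for illustration, not the game’s actual wire format):

```javascript
// Hypothetical message shapes: the real ones are not shown in this post.
var MessageKind = { COMMAND: "command", EVENT: "event" };

// A command asks the system to do something.
function createCommand(name, data) {
  return { kind: MessageKind.COMMAND, name: name, data: data || {} };
}

// An event records something that has already happened.
function createEvent(name, data) {
  return { kind: MessageKind.EVENT, name: name, data: data || {} };
}

// Guard for anything about to go over the wire or between entities:
// it has to be one of the two kinds, and it has to be named.
function isValidMessage(message) {
  return !!message &&
    typeof message.name === "string" &&
    (message.kind === MessageKind.COMMAND || message.kind === MessageKind.EVENT);
}
```

With something like this in place, `createCommand("aimAt", { targetId: "two" })` and `createEvent("targetAcquired", { targetId: "two" })` are easy to tell apart in a log, which helps when trying to work out where a message came from and why.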

I switched a lot of the code so that entities that raised events also listened to those events to update their own state, and every other unit or subsystem did likewise on receiving those events, effectively turning each event-listener into a de-normalised view around its specific area of functionality.
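A small sketch of that pattern, assuming a hand-rolled event mechanism; `Entity`, `addEventHandler`, the `damaged` event and the damage log are all hypothetical names invented for this example:

```javascript
// Hypothetical sketch of entities that listen to their own events.
function Entity(id) {
  this.id = id;
  this.handlers = {};
}

Entity.prototype.addEventHandler = function (name, handler) {
  (this.handlers[name] = this.handlers[name] || []).push(handler);
};

Entity.prototype.raiseEvent = function (name, data) {
  var handlers = this.handlers[name] || [];
  for (var i = 0; i < handlers.length; i++) {
    handlers[i](data, this);
  }
};

function createHovercraft(id) {
  var craft = new Entity(id);
  craft.health = 100;

  // The craft only changes its own state in response to its own event.
  craft.addEventHandler("damaged", function (data) {
    craft.health -= data.amount;
  });

  // Behaviour raises the event and leaves the state change to the handler.
  craft.takeHit = function (amount) {
    craft.raiseEvent("damaged", { amount: amount });
  };
  return craft;
}

// Another subsystem keeps its own de-normalised view of the same events.
function createDamageLog(craft) {
  var log = { totalDamage: 0 };
  craft.addEventHandler("damaged", function (data) {
    log.totalDamage += data.amount;
  });
  return log;
}
```

Because the state change lives in the handler rather than in `takeHit`, it makes no difference to the craft whether the event was raised locally or replayed from somewhere else.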

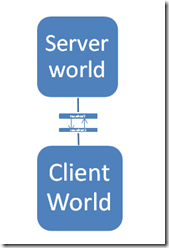

Turns out I accidentally ended up with a system not unlike this:

Then, when bootstrapping entities into the world, I would attach different components to them depending on whether they were in the client or in the server, supposedly making it easier to control who had permissions to do what.

Computer says no.

Turns out that this was a recipe for disaster. Going back to the part where I talk about “maintain your own consistency, and let everybody else do the same”, this is completely counter to that goal: the receivers in between the two worlds got fatter and fatter, pulling more of the logic out of the world and out of the entities that were driving the game, and once again making it hard to work out what was going on. We have a name for this in our general software world: an “anaemic domain model”.

Going back to the messaging aspect above, it ended up being much easier to push everything into the world (including score management etc) and have them as entities that could raise events and handle other entities’ events, and therefore contain their logic and guard their state more appropriately. To do this in a ‘safe’ manner, I’d just have two methods on an entity:

- raiseEvent

- raiseServerEvent

And the entity component would be responsible for determining what it considered to be a special event that only the server could raise – the rest of the logic would be completely identical. As I’ve already suggested, having entities listen to their own events to react to change is a part of this – once raiseServerEvent is called on the server and the event is automatically proxied through to the client, the code on the client carries on as if it had raised the event itself.

This model looks something like this:

And it is suddenly much easier to test and maintain – not to mention that I now have the possibility of running offline games by flicking a flag that allows server events to be raised locally. Perhaps a rename of the method to “raiseProtectedEvent” is in order.
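To make the raiseEvent / raiseServerEvent split concrete, here is a minimal sketch. Only those two method names come from the game; the option names (`isServer`, `allowLocalServerEvents`, `sendToClients`), the `receiveProxiedEvent` hook and the score keeper are assumptions made up for this example.

```javascript
// Hypothetical sketch: option names and the proxy hook are assumptions.
function createEntity(id, options) {
  options = options || {};
  var handlers = {};
  var entity = { id: id };

  entity.addEventHandler = function (name, handler) {
    (handlers[name] = handlers[name] || []).push(handler);
  };

  // Ordinary events: may be raised on the client or the server.
  entity.raiseEvent = function (name, data) {
    (handlers[name] || []).forEach(function (handler) { handler(data); });
  };

  // Protected events: only honoured on the server, or locally when the
  // offline flag is set. Returns true if the event was actually raised.
  entity.raiseServerEvent = function (name, data) {
    if (!options.isServer && !options.allowLocalServerEvents) return false;
    entity.raiseEvent(name, data);
    if (options.isServer && options.sendToClients) {
      options.sendToClients({ entityId: id, name: name, data: data });
    }
    return true;
  };

  // Client side: a proxied server event is replayed through raiseEvent,
  // so the handlers cannot tell it apart from a locally raised one.
  entity.receiveProxiedEvent = function (message) {
    entity.raiseEvent(message.name, message.data);
  };

  return entity;
}

// The same game logic is attached on both the client and the server.
function createScoreKeeper(id, options) {
  var keeper = createEntity(id, options);
  keeper.score = 0;
  keeper.addEventHandler("scoreIncreased", function (data) {
    keeper.score += data.amount;
  });
  return keeper;
}
```

A client built with `{ isServer: false }` silently refuses `raiseServerEvent`, while the same entity built with `{ allowLocalServerEvents: true }` behaves like the server, which is roughly the “flick a flag for offline play” idea described above.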

Next steps

My next steps are to go through the 10 issues written down on a pad of paper next to my desk, wait for the domain name to propagate and push it out onto the internet on its own domain name (without publicising it too much as my client code isn’t too optimal and more than 30 players will probably kill most browsers, oops). I’m pleased with the learning achieved with this project and it is a real joy to see that my time building software that isn’t games hasn’t been completely wasted (lots of principles carry across it seems, although it pays to be more mindful of your state).

I have some profiling to do too: I seem to spend 5% of my time in the garbage collector, 15% rendering particles, and plenty more in a whole lot of other things that really shouldn’t be sucking up that much time. This will be fun and no doubt result in yet another blog entry about JavaScript performance.

And then onwards to the next game, I have some ideas about what challenges I want to set for myself in this space and a game that I want to build that will set them for me.