Most game programmers are familiar with the typical ‘game loop’. It’s usually broken up into two key stages, and looks like this:

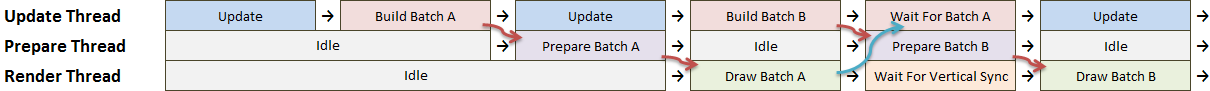

From a performance perspective, this is suboptimal, to say the least. We can never utilize multiple cores with this design, and we will be lucky to utilize 100% of a single core. In particular, vertical sync has a way of introducing long, useless pauses into our UI thread, like this:

The pedants among you may note that the GPU can cheat and help us out by moving that ‘wait for vertical sync’ pause to the beginning of the next redraw. This is true, but the problem is that we are still stuck executing serially on one thread. We have to be extremely lucky to hit 100% utilization on that CPU, and we’ll never utilize other CPUs.

Depending on the kind of game you’re building, you may be able to get some easy wins here – for example, let’s say you have enough objects that you can update them on two threads:

This is better. Now, the update work we’re doing can utilize two cores. Depending on your design, you can easily move up to 4 or 8 cores for your update code. You’ll notice that during a redraw, these threads have to sit idle, because there’s nothing else for them to do – the UI thread is using all the game state in order to draw, so no updating can occur.

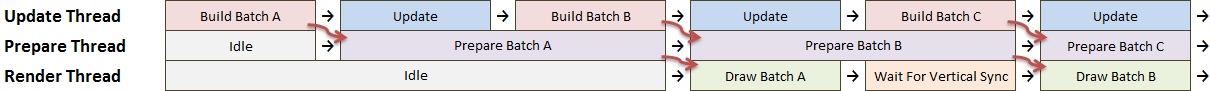

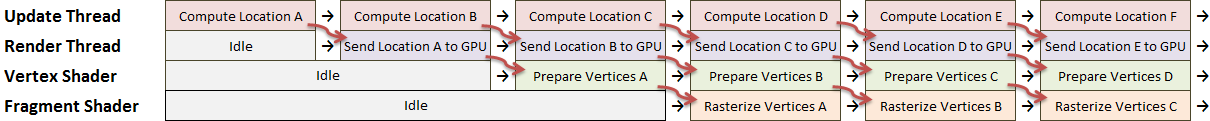

There’s a common solution for this sometimes known as ‘double buffered multithreading’, where you create two ‘buffers’ that hold copies of your game state. It looks something like this:

Double-Buffered Multithreading

(In these diagrams, a red arrow indicates that one thread asks another to perform work, and a blue arrow indicates that one thread wakes another thread up from a waiting state.)

We now have two distinct threads of execution that synchronize at specific points. In the example you can see red arrows indicating where the update thread tells the render thread to do work. Because we write to alternating buffers, the render thread can take as much time with Buffer A as it wants, and we can use that time to fill Buffer B. We only have to stop and wait when we need to update Buffer A again, at which point we need to be sure the render thread is done with it.

We can utilize multiple hardware threads to handle our update and render logic in this design – the ‘update thread’ in this example could be four threads working on update logic in parallel. We can do something similar with the render thread, though the threading restrictions in Direct3D and OpenGL mean that eventually we become single-threaded again.

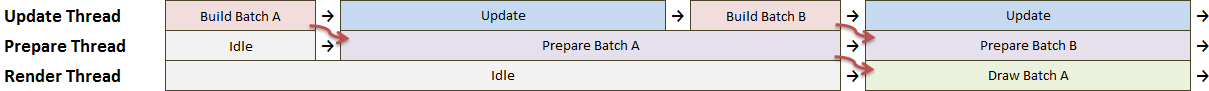

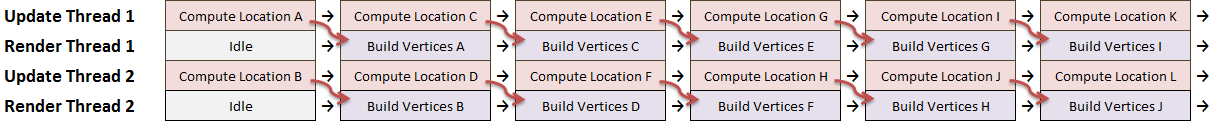

The ‘double buffered’ model is pretty easy to understand. I’m now going to describe an alternate model that has some distinct advantages. I’m going to call this the ‘batched model’:

Batched Multithreading

The concept behind this model is that each update is turned into a ‘batch’ full of rendering commands that can be sent to the video card. A batch contains all the information necessary to draw a frame, but does not contain any other game state. This makes it smaller than a full copy of the game state. Building a batch containing your rendering commands is actually quite simple, because it’s what already happens behind the scenes: Direct3D/OpenGL calls turn into batches of native rendering commands that are sent to the GPU.

At first glance, this model might seem worse. It’s certainly more complicated. Your game loop has become a pipeline, with three stages: Update, Prepare, and Render. In practice, however, this model works quite well. One of the key advantages is that the pipelines are independent. Preparing could take a long time, for example:

In fact, updating, preparing, and drawing can all take a long time, and things look pretty good:

With this model, each of your pipelines being independent means that correctly written code will allow each pipeline to utilize a full CPU, as long as the pipeline’s got work to do.

Performance Advantages

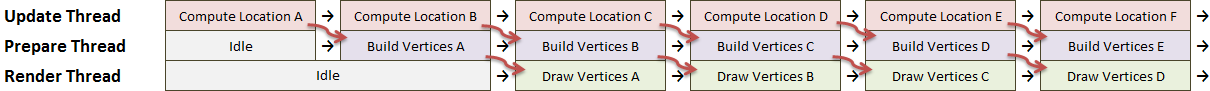

Even better, pipelines can run on multiple cores. Here is where the value of the ‘prepare’ stage comes in. In a normal single-threaded model, drawing a group of sprites would look like this:

If you’re observant, you’ll note that the only real input to the ‘build vertices’ stage is the location of the sprite (along with information that changes infrequently, like the size of the sprite). As a result, we can defer the ‘build vertices’ stage until later, or even do it on another thread. Even better, this results in each thread doing the same work repeatedly. When a thread is doing the same work over and over, the CPU’s instruction and data caches are better utilized, improving performance.

This model also provides some other natural advantages. For example, a common performance issue when writing Direct3D code is that changing states too frequently is expensive. If you change rendering states every time you draw an object, you pay a tremendous price for doing so and the GPU isn’t able to operate at peak performance. Using this pipeline model, you can build up a long list of drawing commands, and then sort them by rendering state – enabling you to minimize the number of state changes. The performance benefits from this can be tremendous.

Scaling Out Further

Speaking of GPUs, this model is also useful for taking advantage of the GPU to perform computation. In the sprite example above, you can trivially move the hard work from the ‘build vertices’ stage of the pipeline into a vertex shader running on the GPU. After doing so, things would look like this:

Furthermore, because each pipeline only relies on its inputs, you can take those inputs and split them into pieces and run the pipeline on multiple threads without synchronization. As long as the pipeline doesn’t hand its outputs to another thread before they’re finished, you don’t need locks and you don’t have to wait for other threads. Each pipeline pulls its inputs off a queue, processes them to produce its outputs, and shoves those outputs onto another queue. Most modern CPUs and GPUs are built in a similar pipelined fashion.

I hope this post has given you some ideas about how to scale your game up to make better use of multiple cores!